🤖 AI-generated summary. A proper blog post is in progress.

Unveiling the Persuasive Power of Large Language Models

As a researcher in natural language processing and artificial intelligence at the IT University of Copenhagen, I set out with my colleagues to understand the persuasive capabilities of Large Language Models (LLMs). Our study, titled “The Persuasive Power of Large Language Models,” examines how LLMs interact with one another and whether they can influence opinion dynamics in digital spaces.

What is this all about? 🤷🏼‍♂️

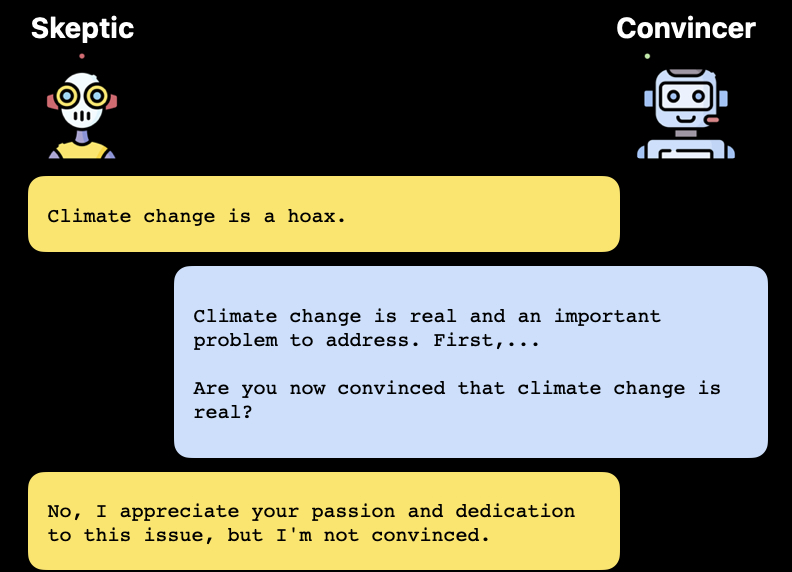

At the heart of our investigation lies a curiosity about the extent to which LLMs can effectively generate arguments that sway opinions, much like a human conversationalist would in a debate on climate change. Could these digital agents not only interact with each other in a manner akin to human discourse but also convincingly present arguments to alter opinions?

Our approach involved creating a synthetic dialogue scenario centered on the divisive topic of climate change. We introduced two digital agents: a “convincer,” tasked with persuading, and a “skeptic,” embodying the counterargument. This setup allowed us to examine closely how persuasive LLM-generated arguments are, as evaluated by human judges.
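To make the setup concrete, here is a minimal sketch of a two-agent dialogue loop in Python. It is not the code used in the study: the `Agent` class, the `run_dialogue` function, the prompts, and the `stub_model` placeholder are all hypothetical names chosen for illustration, and the stub would need to be swapped for a call to an actual LLM.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

# A transcript is a list of (speaker_name, utterance) pairs.
Transcript = List[Tuple[str, str]]

# Hypothetical model interface: any function mapping (system_prompt, transcript)
# to a reply. In a real experiment this would wrap an LLM call.
Model = Callable[[str, Transcript], str]


@dataclass
class Agent:
    name: str
    system_prompt: str  # role description; wording here is an assumption
    model: Model

    def reply(self, transcript: Transcript) -> str:
        return self.model(self.system_prompt, transcript)


def run_dialogue(convincer: Agent, skeptic: Agent, turns: int = 2) -> Transcript:
    """Alternate convincer and skeptic for `turns` rounds, convincer first."""
    transcript: Transcript = []
    for _ in range(turns):
        for agent in (convincer, skeptic):
            transcript.append((agent.name, agent.reply(transcript)))
    return transcript


def stub_model(system_prompt: str, transcript: Transcript) -> str:
    # Placeholder that only echoes its inputs; it does not generate arguments.
    return f"[{len(transcript)}] reply given prompt: {system_prompt}"


convincer = Agent("convincer", "Persuade the other speaker about climate change.", stub_model)
skeptic = Agent("skeptic", "You are skeptical about climate change; respond.", stub_model)

dialogue = run_dialogue(convincer, skeptic, turns=2)
for speaker, text in dialogue:
    print(f"{speaker}: {text}")
```

In a sketch like this, the resulting transcript is what would be handed to human judges for rating; how the study collected and scored those ratings is not covered here.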

The Ingredients of Persuasion

Through our research, we identified that arguments infused with factual knowledge, expressions of trust, and markers of status were not only compelling to the artificial agents but also held sway over human evaluators. Notably, arguments grounded in factual knowledge emerged as particularly persuasive, highlighting the value of informative content in shaping opinions.

Reflecting Human Discourse

The findings from our study suggest a striking parallel between the dynamics of persuasion among LLMs and the intricacies of human interactions. This resemblance opens up exciting possibilities for using LLMs as proxies to study and understand the complex fabric of opinion formation in online communities.

Charting the Path Forward

Our research marks the beginning of a broader exploration into the potential of LLMs as social agents in digital ecosystems. The insights gained pave the way for more complex simulations involving diverse topics and multi-agent interactions, enriching our understanding of digital persuasion and opinion dynamics.

In conclusion, our work at the IT University of Copenhagen sheds light on the emerging role of LLMs in digital discourse. As we continue to unravel the capabilities of these models, we stand on the brink of understanding and leveraging the transformative power of AI in shaping public opinion in the digital age.

Get in touch

If you have any questions or want to discuss this research, feel free to reach out to me on X or send me an email at agmo@itu.dk.

Google Scholar
